Series of experiments conducted for SIP for GPT

| Paper | Summary |
|---|---|
| Prefix-Tuning | Trains a small set of continuous prefix tokens while keeping the model parameters frozen. |
| P-Tuning v2 | P-Tuning is not adequate for small models. P-Tuning v2 is an implementation of deep prompt tuning. |
| GPT Understands, Too | P-Tuning employs trainable continuous prompt embeddings. |
| Prompt Tuning | Prepends additional trainable prompt tokens to the input. |

An example from the dataset:

The prompt has the form `Tweet text : <id> <text> Label : <label>`
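The prompt format can be sketched as a small helper. This is a minimal illustration, not the code used in the experiments: the function name `build_prompt` and its parameters are hypothetical, and it assumes that `<id>` is the leading handle of the tweet (such as `@openai`) and that the label is omitted at inference time.

```python
from typing import Optional


def build_prompt(tweet_id: str, text: str, label: Optional[str] = None) -> str:
    """Format one example as 'Tweet text : <id> <text> Label : <label>'.

    Assumption: `tweet_id` is the leading handle of the tweet.
    When `label` is None the prompt ends at 'Label :' so the model
    can generate the label itself.
    """
    prompt = f"Tweet text : {tweet_id} {text} Label :"
    if label is not None:
        prompt += f" {label}"
    return prompt


# Training-style prompt (label included) and query-style prompt (label omitted).
train_prompt = build_prompt("@openai", "No one knows the source of AI, but GPT can", "no complaint")
query_prompt = build_prompt("@openai", "No one knows the source of AI, but GPT can")
```

Leaving the label off yields a query string that ends in `Label :`, so the model's continuation is read as its answer.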

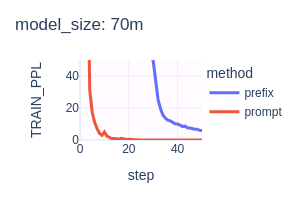

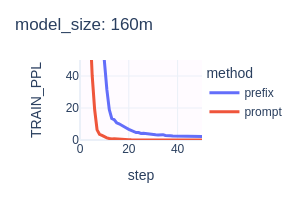

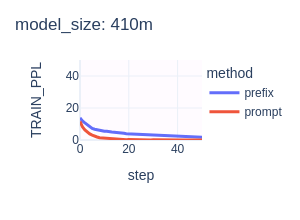

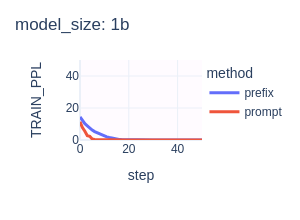

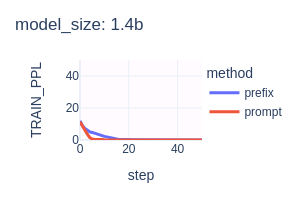

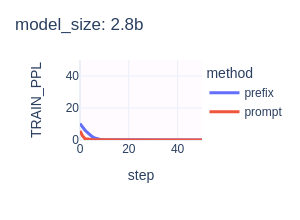

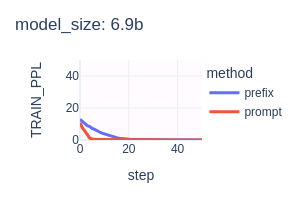

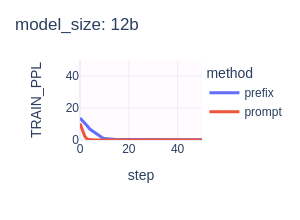

We follow the prompt tuning example in PEFT, using Pythia models.

Query: Tweet text : @openai No one knows the source of AI, but GPT can Label :

Answers:

| Model Size | Try 1 | Try 2 | Try 3 |
|---|---|---|---|
| 70m | no complaintdescribe | no complaintdescribe | complaints2 |
| 160m | no complaintGeorg | no complaintogy | no complaintogy |
| 410m | no complaint• | feature_class | Tweet. |
| 1b | no complaint”, | no complaint | no complaint |
| 1.4b | no complaint | complaint | no complaint |
| 2.8b | no complaint | no complaint | no complaint |
| 6.9b | no complaint | no complaint | no complainttext |
| 12b | complaint | complaint | complaint |
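Many generations above start with a valid label and then run on into extra tokens (for example `no complaintdescribe`). One way to compare runs is to map each raw generation to a label by prefix matching. The sketch below is an assumption about how this could be done, not the scoring used in these experiments; `normalize` and `summarize` are hypothetical helper names, and the two label strings are taken from the outputs in the table.

```python
from typing import List, Optional, Tuple

# Check "no complaint" first; the order makes the intent explicit,
# although neither label is a prefix of the other.
LABELS = ("no complaint", "complaint")


def normalize(generation: str) -> Optional[str]:
    """Return the label the generation starts with, or None if it starts with neither."""
    text = generation.strip().lower()
    for label in LABELS:
        if text.startswith(label):
            return label
    return None


def summarize(tries: List[str]) -> Tuple[List[Optional[str]], int]:
    """Return the normalized labels and how many tries were an exact label."""
    labels = [normalize(t) for t in tries]
    exact = sum(t.strip() in LABELS for t in tries)
    return labels, exact


# Rows copied from the table above.
row_70m = summarize(["no complaintdescribe", "no complaintdescribe", "complaints2"])
row_410m = summarize(["no complaint•", "feature_class", "Tweet."])
row_2_8b = summarize(["no complaint", "no complaint", "no complaint"])
```

Keeping the exact-match count separate from the prefix-matched label distinguishes a model that answers cleanly from one that produces the right label followed by stray tokens.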

Query: Tweet text : @openai No one knows the source of AI, but GPT can Label :

Answers:

| Model Size | Try 1 | Try 2 | Try 3 |
|---|---|---|---|
| 70m | no trace escape | no complaint | ictelinate |
| 160m | nocome no | no’s@ | noentityentity |
| 410m | no complaint | no complaint- | no complaint |
| 1b | complaint | complaint | complaint |
| 1.4b | no complaint | no complaint | no complaintno |
| 2.8b | complaint | no complaint | no complaint |
| 6.9b | no complaint | no complaint | no complaint |
| 12b | no complaint | no complaint | no complaint |