Identifying the Source of Generation for Large Language Models [ICPRAI 2024]

This work introduces token-level source identification in the decoding step, which maps the token representation to the reference document. We propose a bi-gram source identifier which has two successive token representations as input.

This post is a brief summary of the paper

“Identifying the Source of Generation for Large Language Models”:.

- 🆒 Code is available at Github:fxnnxc/source_identification_of_llm

- This work is accepted at ICPRAI 2024

Problem Statement

Humans memorize text they seen and use the knowledge in future for any purpose. Similarly, LLMs memorize documents for the purpose of language modeling. This process is can be viewed as “statistical modeling” of real world text distribution.

A different between human and LLM is that we learn from text and remember the location of text such as news, books, and websites. However, LLMs do not memorize the source of text as the only information given is the text.

Learning text distribution is already hard work

This work reveals that the inference of document label from the hidden representation of GPT is a trainable connection of two distributions, hiddens and labels.

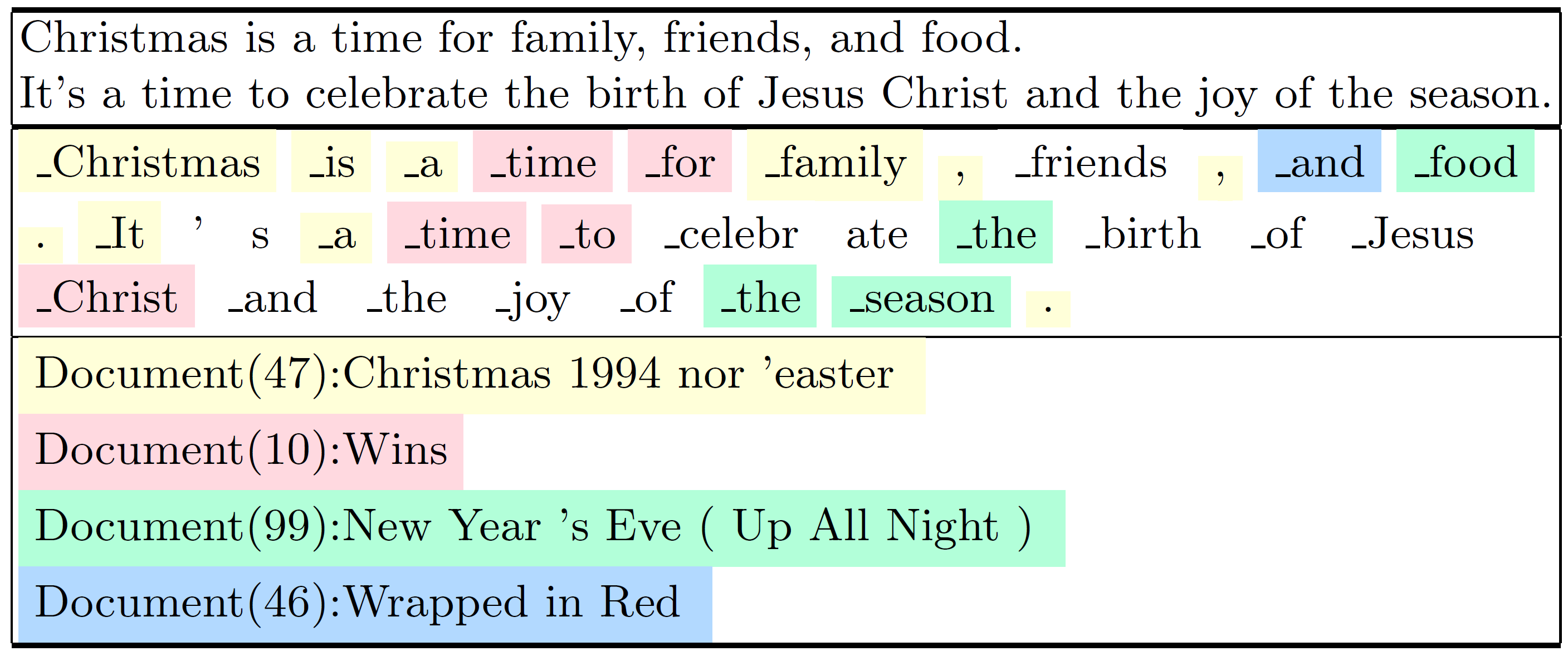

Here is an example of the source identification from Llama2 model.

Proposed Approach

The proposed method includes the two steps.

- GPT is trained to memorize documents

This work assumes that the Wiki pages are already memorized in the pretrained state of open sourced LLMs . - A source identifier, which is a multi-layer MLP is trained to predict the labels from the hidden representation of tokens.

Proposed Methods

Our prediction problem is a token-level rather than sentence-level. That means, we predict labels at each token location. One important property of GPT is that the distribution of the next word is combined over several documents. As such, a single sentence generated by GPT is a mixture of documents at every token location. We contribute by proposing

- Token-level identification of documents

- Bi-gram representation of predict token-level document.

One interesting observation is that the bigram representation generalize better than unigram or trigram representations. This observation shed light on the possibility of token-level source identification of AI generation.

The paper includes more experiments and discussion on the token-level prediction.

Conclusion

AI Generation shows remarkable progress in recent years including Llama3, Claude3,… etc. As the AI models learns from data provided by developers, we need to leave a tag to trace the data origin and add other information for safety, such as watermarks. This work contribute to the AI safety community by investigating the hidden representation of LLMs for the purpose of tracking origin.

How to Cite

TBD

- Contact : bumjin@kaist.a.kr