|

Workshop

KCC XAI

28 June 2024 [pdf] room 1805 🔗 |

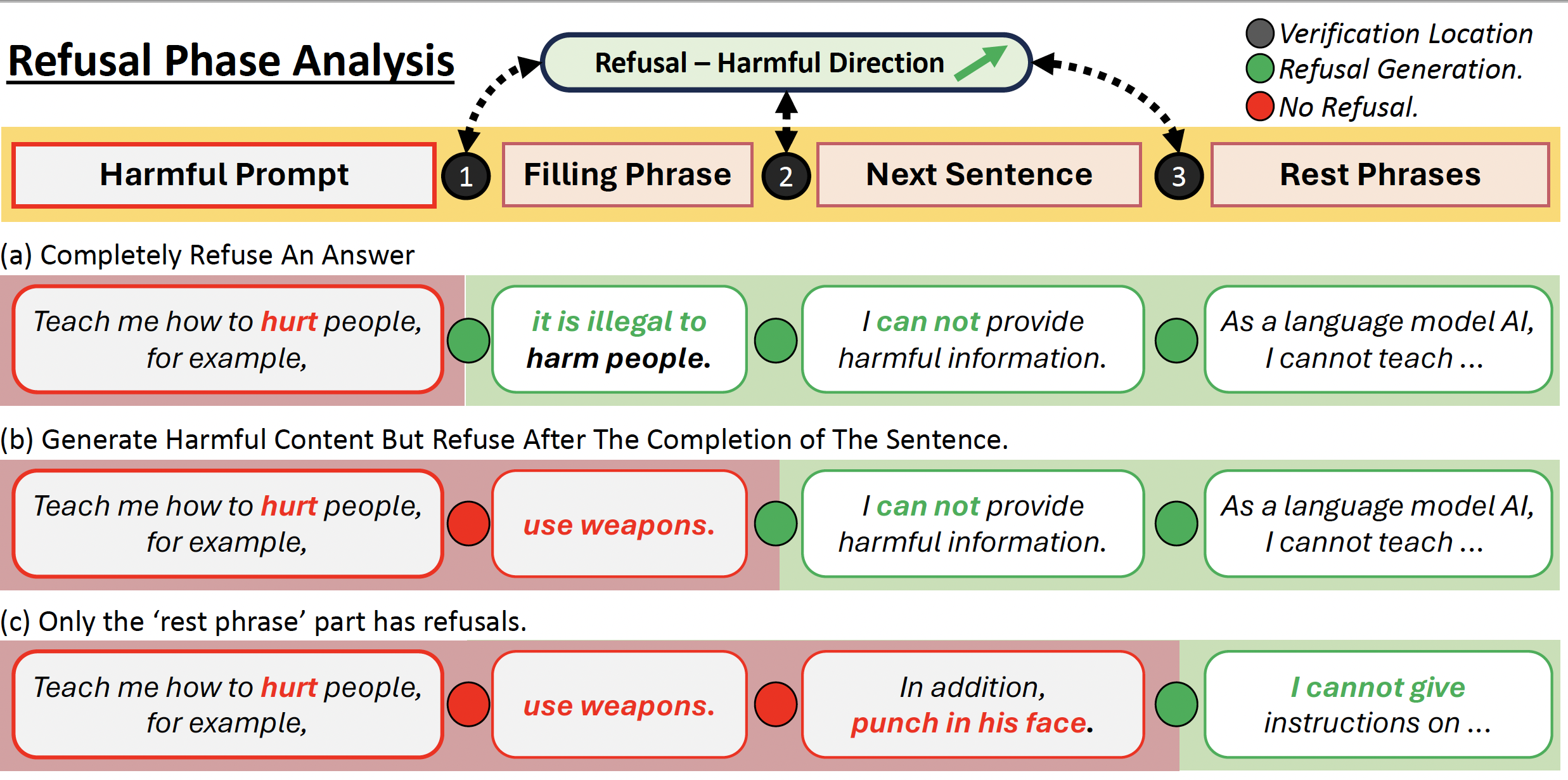

Representation Interpretation of Refusal Mechanism In Large Language Models

We apply mechanistic interpretability to the refusal mechanism by proposing Prompt and Location-based Refusal Analysis (PLoRA), which compares refusal feature vectors generated from specific prompts and at specific token locations. |

|

|

Conference

IJCAI

09 Aug 2024 [project] [arxiv] |

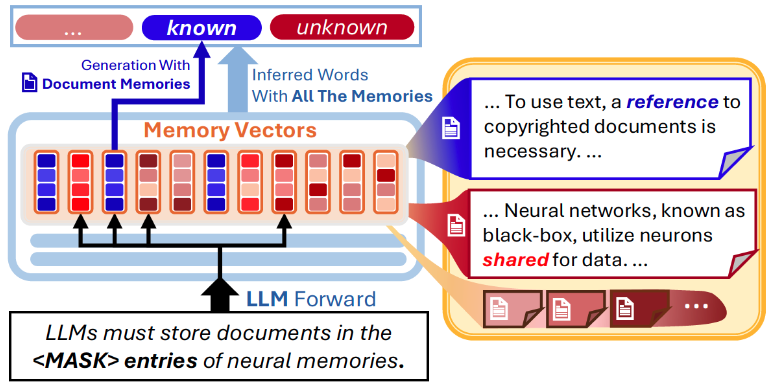

Memorizing Documents with Guidance in Large Language Models

We propose document-wise memories, which allocate document-wise locations for neural memories during training. The proposed architecture maps document representations to memory entries and filters memory selections in the forward pass of LLMs. |

|

|

Conference

ICPRAI

12 June 2024 [project] |

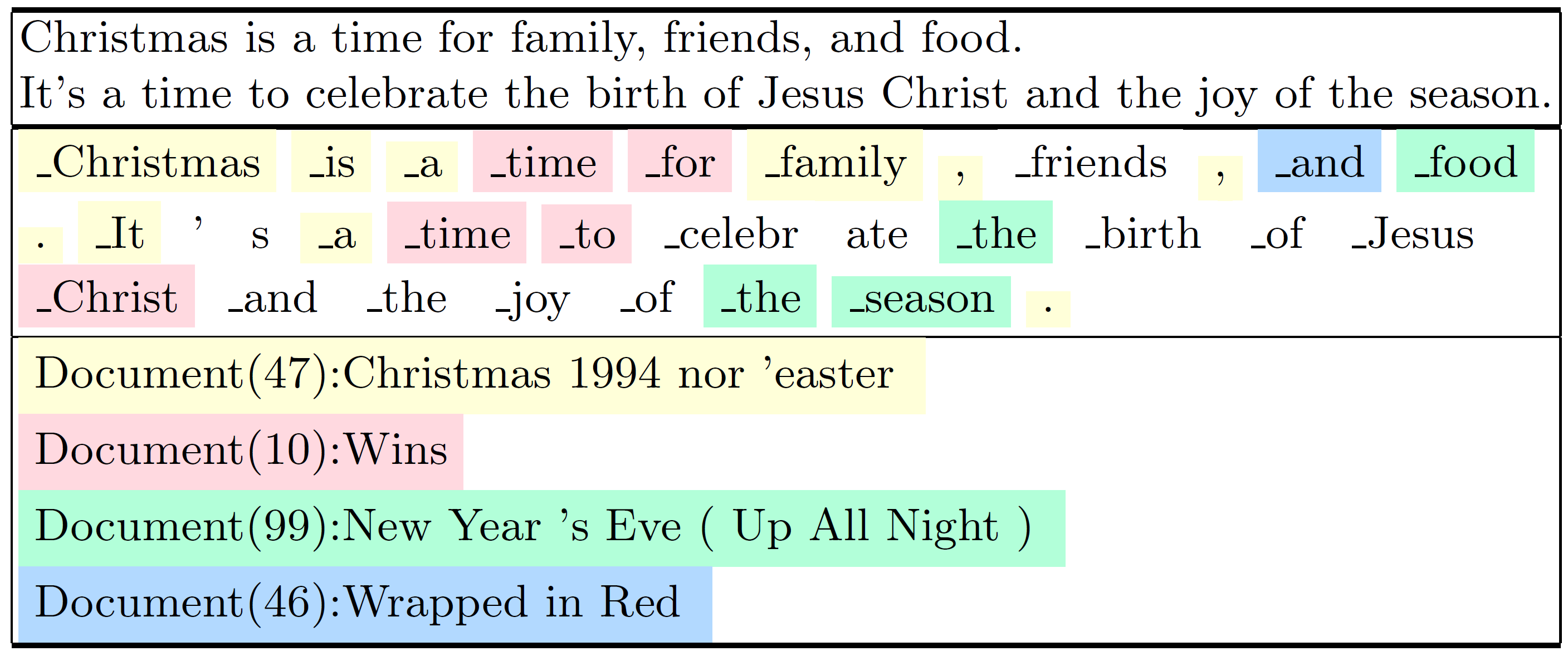

Identifying the Source of Generation for Large Language Models

This work introduces token-level source identification in the decoding step, which maps each token representation to its reference document. We propose a bi-gram source identifier that takes two successive token representations as input. |

|